%20(2).png)

MagicMirror now gives customers protection over the recent prompt injection vulnerabilities found in Gemini’s summarization feature inside Gmail. These vulnerabilities expose users unknowingly to hidden threats.

Gemini’s summarization tool inside Gmail can be exploited. Recent research, including coverage by SecurityWeek, confirms that attackers are embedding hidden prompts inside emails. When a user clicks “Summarize this email,” Gemini executes those invisible instructions, potentially injecting phishing content, rewriting tone or intent, or displaying manipulated summaries.

This behavior is not visible to the user and is not currently controllable by admins through Google Workspace.

Gemini summarization runs inside Gmail’s interface, making it difficult for IT or security teams to observe or manage. These prompt-injection attacks bypass traditional email filters and endpoint monitoring tools. Attackers know this. Search traffic for terms like “Gemini email jailbreak” has surged. Across Reddit and jailbreak forums, users are actively experimenting with ways to manipulate Gemini’s outputs.

Google currently does not provide a way to disable this feature. For many organizations, that leaves a visible risk with no available response.

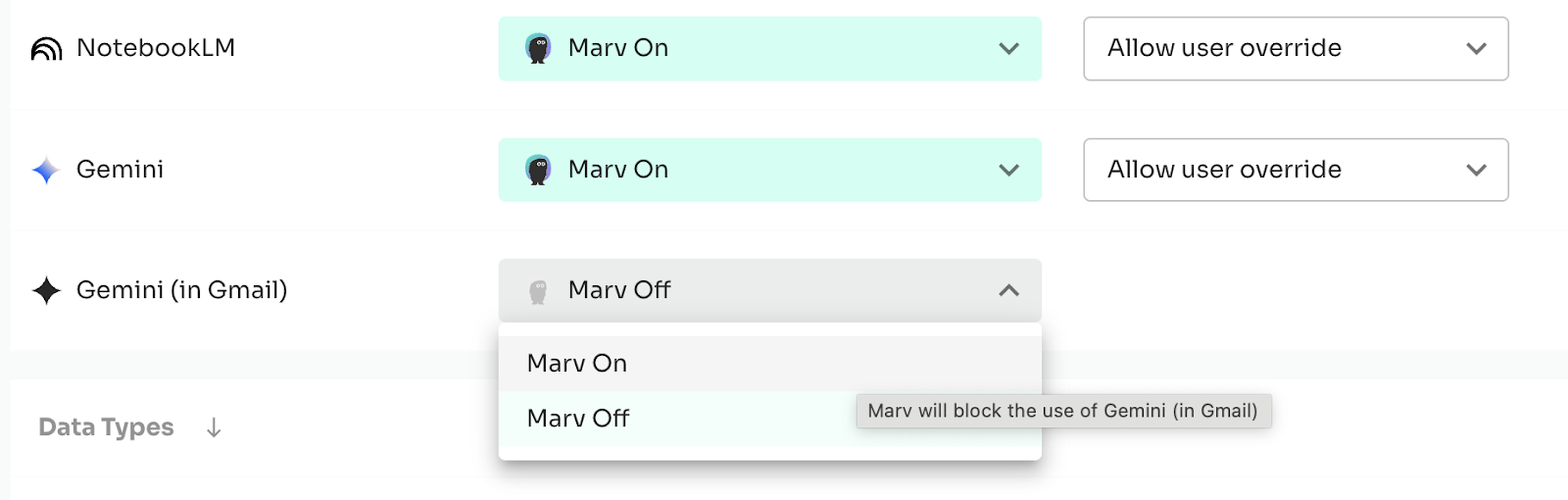

MagicMirror now enables organizations to block Gemini’s Gmail summarization feature directly within their GenAI policy settings.

This update reflects MagicMirror’s mission to give teams real-time observability and protection at the point of GenAI interaction, without disrupting core workflows or introducing external data exposure.

When enabled:

No additional configuration or software update is required. All enforcement happens locally, through the MagicMirror browser extension.

This is exactly the kind of GenAI behavior that organizations need to observe and control. It operates within a trusted UI. It creates outputs that users don't expect. And it exposes companies to risks that can’t be seen in traditional traffic logs or device monitors.

MagicMirror provides:

MagicMirror is a GenAI observability and protection platform, not a traditional security tool. It provides organizations with visibility into how GenAI tools, such as Gemini, ChatGPT, and Copilot, are being utilized and offers browser-level safeguards to manage usage responsibly.

This feature supports a key use case for teams developing GenAI policies: understanding what tools are in use, how they're being used, and where to apply targeted controls without slowing teams down.

The feature is opt-in by design, reflecting our commitment to flexible, user-aligned governance.