Anthropic has set a firm deadline: on September 28, 2025, Claude will begin using user interactions to train its AI models — unless you opt out.

This update affects all users of Claude Free, Pro, Max, and Claude Code. While enterprise and API-based offerings are not included for now, the shift signals what’s likely coming next: a broader erosion of default data privacy across GenAI platforms.

For organizations without centralized oversight, this means one thing: your company’s data may be used to train external models without your knowledge or consent.

And the risk doesn’t end with chat interactions. Anthropic is also piloting a Claude Chrome extension, giving the assistant access to browser content, including SaaS apps and internal tools. Few organizations currently have any controls in place to monitor or restrict this behavior.

Taken together, these changes mark a turning point. What began as consumer tools are now operating inside enterprise environments and often undetected.

This is the moment to regain control over GenAI usage inside your business.

Effective September 28, 2025, Claude will begin training on user inputs by default — unless the user opts out.

1. Model training is now on by default: New and resumed conversations are eligible for training. Dormant or deleted chats are excluded — unless reopened.

2. Retention period extended to five years: Claude will retain user interactions for up to 5 years if training is enabled. Opting out reverts to the previous 30-day retention standard.

3. The opt-out experience is easy to miss: A “Terms Update” screen includes a large “Accept” button and a small toggle to disable training. If no action is taken, users are opted in by default.

4. Applies only to consumer plans — for now: Free, Pro, Max, and Claude Code are affected. Claude for Work, Claude for Education, and API usage via Bedrock or Vertex AI are currently excluded.

5. No retroactive deletion: Opting out does not remove previously shared data that has already been used for training.

Alongside this policy change, Anthropic has begun testing a Claude browser assistant in Chrome. This assistant can read and act on the content of your active tab — including emails, websites, and internal dashboards.

This opens up new vectors for accidental exposure, cross-tab data access, and manipulation via prompt injection — all inside environments that most IT teams don’t currently monitor.

As VentureBeat reported, browser-based AI agents are now vulnerable to real-world prompt injection, where malicious instructions on a web page can override the model’s behavior — exfiltrating data or bypassing user intent entirely.

This shift from standalone chatbots to browser-integrated agents changes the security model entirely. And without visibility, you won't know it's happening.

Even if you haven’t formally deployed Claude, it’s likely already in use across your organization and is now more risky given the policy changes happening on consumer accounts that are likely being used by employees.

1. Data exposure

Employees may paste sensitive data into Claude, including source code, contract terms, customer information, or forecasts. If training is enabled, that content may be stored and reused.

2. Compliance gaps

A five-year data retention window may violate your organization’s compliance obligations under regulations like GDPR, CCPA, and SOC2.

3. Unmonitored browser access

The Claude Chrome extension introduces an untracked channel for accessing and processing internal data inside SaaS apps and browser sessions.

4. Shadow AI adoption

Consumer-tier tools are often used outside of formal IT controls. If you don’t know who’s using Claude or with what settings, you can’t protect your data.

MagicMirror is purpose-built to protect organizations from this exact class of GenAI risk, especially shadow AI usage and browser-based exposure.

1. Real-time, browser-level data interception

MagicMirror classifies and blocks sensitive inputs, including PII, PCI, source code, and regulated content — before they ever reach tools like Claude or ChatGPT.

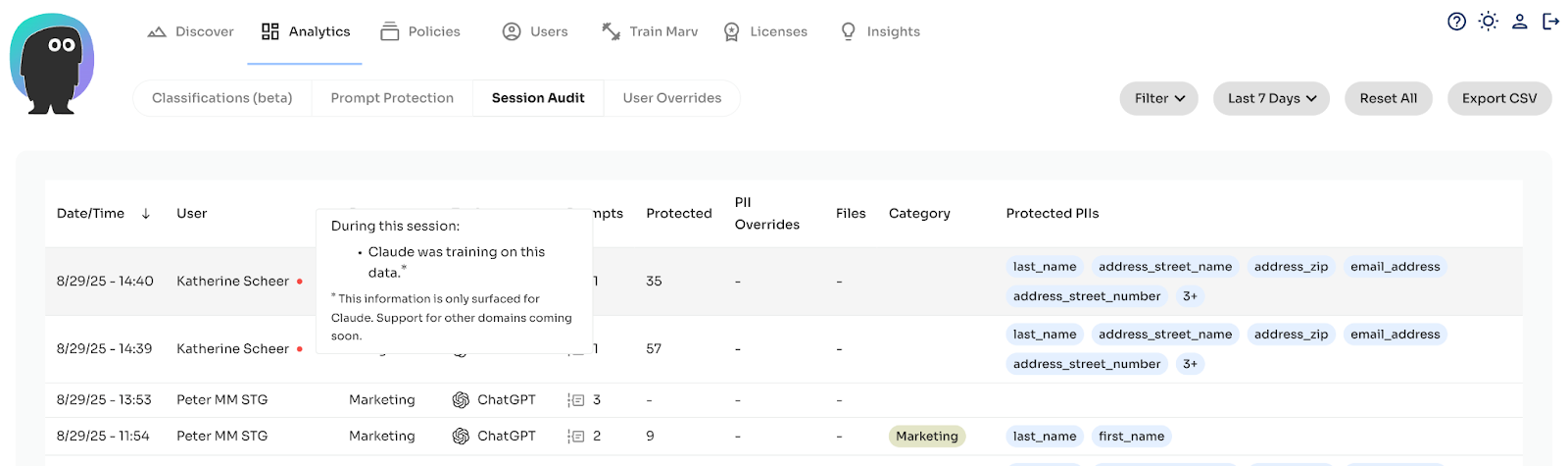

2. Visibility into tool usage and training settings

Our Session Audit shows which employees are using Claude, what they’re using it for, and whether “train on my data” is enabled giving IT and Security the visibility they’ve never had before.

3. Prompt injection and DOM-level monitoring

MagicMirror watches for injected prompts and suspicious model behavior in the browser including extensions like Claude’s Chrome assistant.

4. Local-first architecture

All protections are enforced on-device. Nothing is routed through MagicMirror servers, and no data ever leaves the user's machine.

To prepare for Claude’s policy changes and the broader shift toward embedded GenAI agents take the following steps:

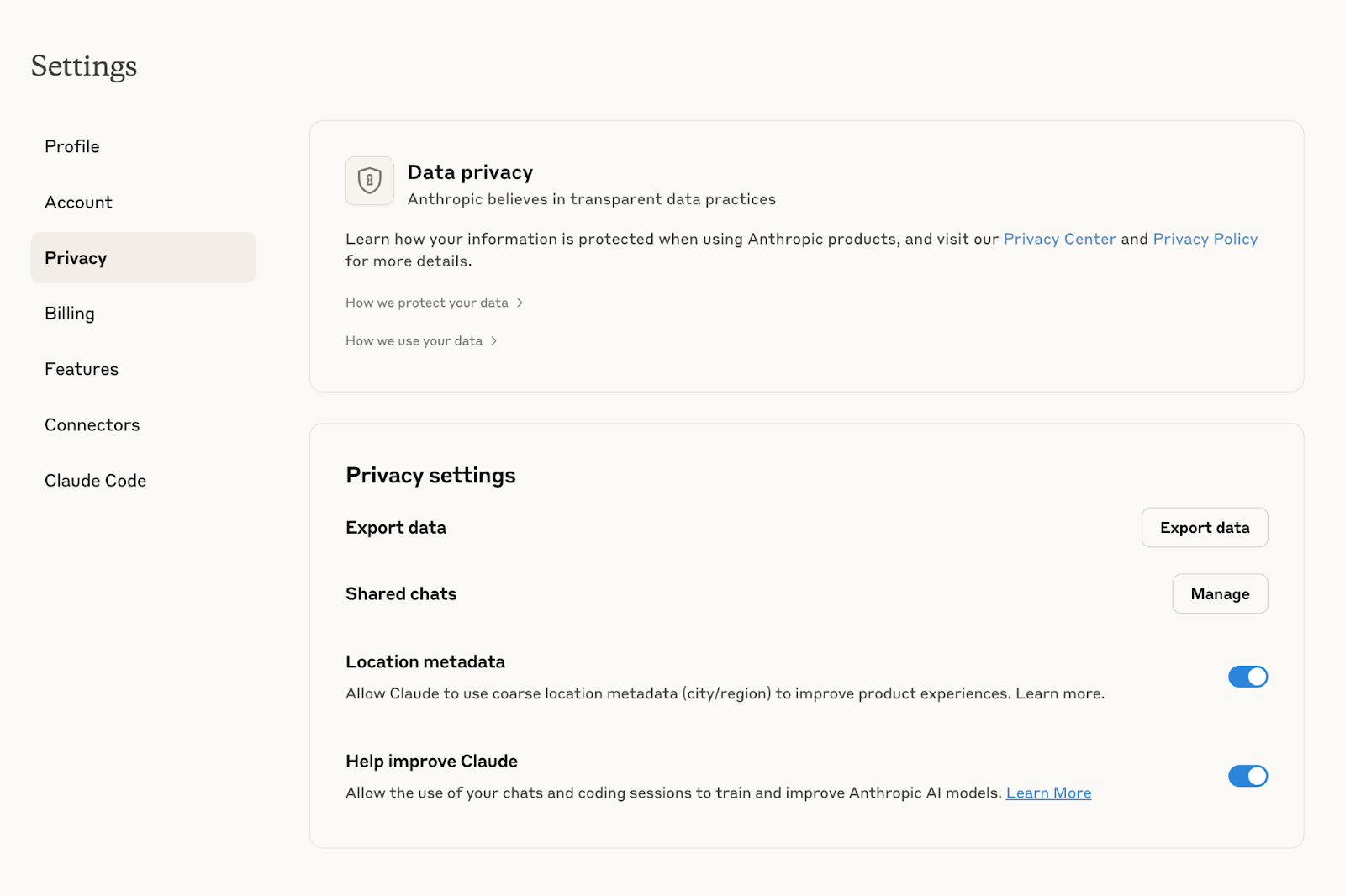

Communicate Anthropic’s updated terms and require employees to disable model training:

Claude Settings > Privacy > Model Training > Toggle Off

Audit for unauthorized installations and block the extension via endpoint or browser management if your policy prohibits AI assistants.

Gain visibility into how Claude and other GenAI tools are being used, and stop sensitive data from being shared, even in shadow or consumer-tier sessions.

Clarify what tools are approved, what settings are required, and how browser-based GenAI usage will be governed moving forward.

Claude’s policy changes are part of a broader trend: shifting from privacy-by-default to privacy-by-choice — and from standalone apps to fully integrated browser agents.

For organizations without real-time observability, this creates a dangerous blind spot. You cannot secure what you cannot see.

MagicMirror gives you control over the GenAI usage you can’t otherwise detect before data is exposed, before policies are violated, and before these changes extend to enterprise tools.

Request a demo to see how MagicMirror helps IT and Security teams: